Reflection is a natural and important part of our lives, in which we engage with the memories of past experiences to help us process our world. This activity can lead to improvements in work, education, and health and wellbeing. But reflection isn’t necessarily straightforward: it can be difficult to make sense of our past experiences. Nor is it spontaneous; people need to prepare to reflect. Many technologies have been developed to help us reflect. Activity trackers are good examples, because they can help us see improvements in our health over time and may encourage us to continue exercising. However, we don’t know much about how tangible objects can help us prepare to reflect on our daily life, and this area is not currently served by consumer products. The Tangible Feelings project presents a new view on reflection through a tangible technology that helps people prepare for reflection.

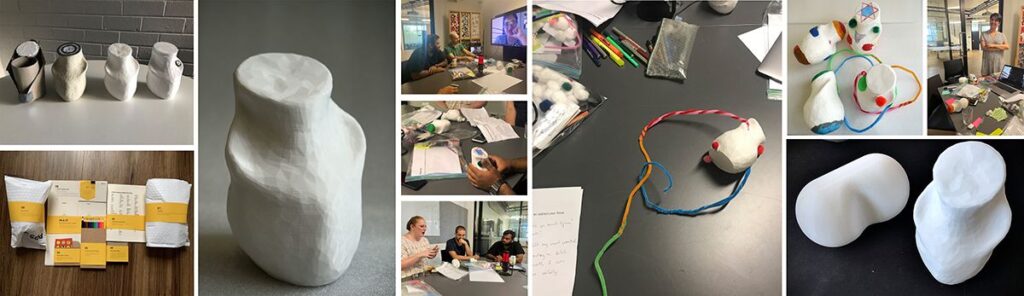

To study reflection, we developed two technology probes: small prototypes that are given to users for research and that allow us to understand how they choose to interact with the device. The devices are small, held in both hands, and have an organic form that was 3D printed from a scanned model. One technology probe was given to users to understand how biofeedback provides a point of focus for reflection. The other was given to designers as a blank 3D printed object to which they could propose modifications. Both groups lived with their technology probe and told us, through interviews, journals and a workshop, how the design can be improved or augmented in the future.

People

- Dr Phillip Gough

- Dr Baki Kocaballi (UTS)

- Khushnood Naqshbandi

- Karen Cochrane (Carleton University)

- Kristina Mah

- Ajit Pillai

- Ainnoun Kornita Deny (UTS)

- Yeliz Yorulmaz (Independent Artist)

- Dr Naseem Ahmadpour

Publications

- Gough, P., Kocaballi, A. B., Naqshbandi, K., Cochrane, K., Mah, K., Pillai, A., … Ahmadpour, N. (2021). Co-designing a Technology Probe with Experienced Designers. In Proceedings of the Australian Computer-Human Interaction Conference (OzCHI’21), ACM.