As autonomous robots increasingly become part of our urban landscapes, navigating shared spaces and interacting closely with people, they raise significant challenges for human-robot co-navigation. It is essential to improve communication and mutual understanding between humans and robots. However, the inherent complexity and opacity of AI technology create substantial obstacles. Pedestrians often find it hard to comprehend the decision-making processes occurring within these technological ‘black boxes.’ This lack of understanding hinders seamless co-navigation and can foster distrust of and resistance towards robots. Our project therefore explores how visualising an autonomous robot’s internal operational information can influence pedestrian trust and understanding.

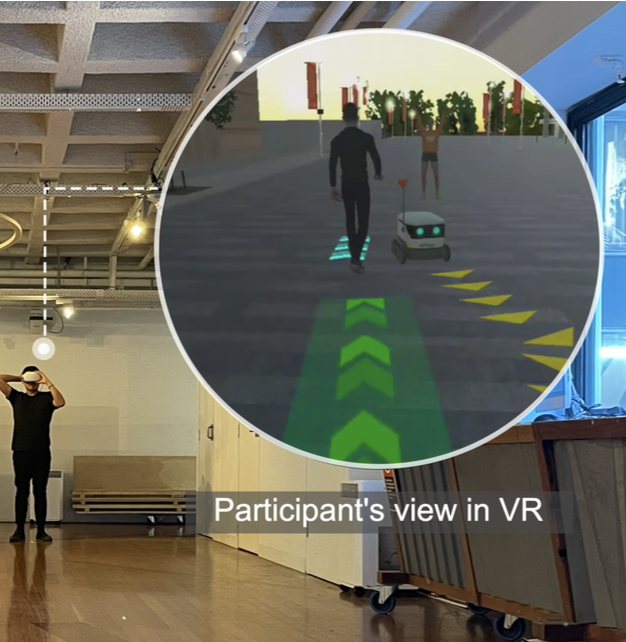

Through an iterative process, and by consolidating feedback from two expert focus groups, we developed an augmented reality (AR) design concept. The concept visualises the algorithmically predicted path of pedestrians alongside the robot’s navigation intent. In a virtual reality (VR) experiment, we prototyped this concept and compared it against two conditions: a baseline without visualisation and one displaying only the robot’s navigation intent.

Our findings indicate that the visualisation of path predictions significantly boosts people’s trust in the robot. Further, a detailed triangulation of quantitative and qualitative data underscores the positive impact of visualising pedestrian path predictions on robot understandability. Interestingly, we also discovered that these visualisations encourage exploratory behaviours among people interacting with these robots.

People

- Xinyan Yu

- Marius Hoggenmüller

- Martin Tomitsch

Publications

- Xinyan Yu, Marius Hoggenmüller, and Martin Tomitsch. 2023. Your Way Or My Way: Improving Human-Robot Co-Navigation Through Robot Intent and Pedestrian Prediction Visualisations. In Proceedings of the 2023 ACM/IEEE International Conference on Human-Robot Interaction (HRI ’23).