Almohannad Albastaki

In human-robot interaction, considerable effort has gone into how robots may express state, be it functional or emotional. Studies on humanoid robots have employed facial expressions, speech and bodily movements. However, people often notice the limitations of these systems, and overcoming the uncanny valley remains a difficult task.

For non-humanoid robots, other modalities such as light, movement, and sound have been investigated. In-lab studies often employed emotional models to guide their designs, and their results have confirmed the accuracy of the resulting expressions. However, a recent in-the-wild study pointed to problems with using these models: some emotions were seen as unnecessary, and the models do not support behaviour that is relative to the shape and function of a robot.

This research-through-design study looks to address this gap by investigating how a combination of functional and emotional expression may be designed for non-humanoid robots, with light and sound, using “Woodie”, a ludic urban robot, as the agent.

These designs were prototyped in a virtual environment for testing. Eight experts were invited to evaluate the designs through semi-structured interviews. They found the expressions indicative of emotional and functional state. The experts associated Woodie with various living things, crediting sound and awareness as contributing factors. Having a sense of directionality strongly influenced interactivity.

By adapting a functional framework and using insights from previous work on robotic expression, we show how carefully designing expressions to be both functional and emotional is effective at giving the robot a behaviour and hence anthropomorphising it. We suggest a design framework that combines the two forms of expressions and detail some considerations when employing this design process. In light of the pervasiveness of urban robots in public spaces, this framework presents itself as a viable strategy for designing expressive robots for the sake of affective human-robot interaction.

Images

Download and play with the prototype (macOS only)

Steps to download:

- Press “Download” at the top right corner, then “Direct Download”, to download a compressed folder.

- Use The Unarchiver from the App Store to extract the files. Using Archive Utility (the default extraction app for macOS) will corrupt the executable application.

- Double-click “WoodieMacApp” to open the prototype. If the app does not launch because of security settings, go to System Preferences -> Security & Privacy and, under “Allow apps downloaded from:”, click “Open Anyway”.

https://www.dropbox.com/sh/yaqzjllkr9ybgsb/AABOoIoTaxCF_iRowfczWGDpa?dl=0

Controls:

- “WASD” to move around: W – Forward, A – Strafe left, S – Backwards, D – Strafe right

- Use mouse to change look direction

- “Escape” to move mouse around freely

- “CMD+F” to exit full screen

Notes:

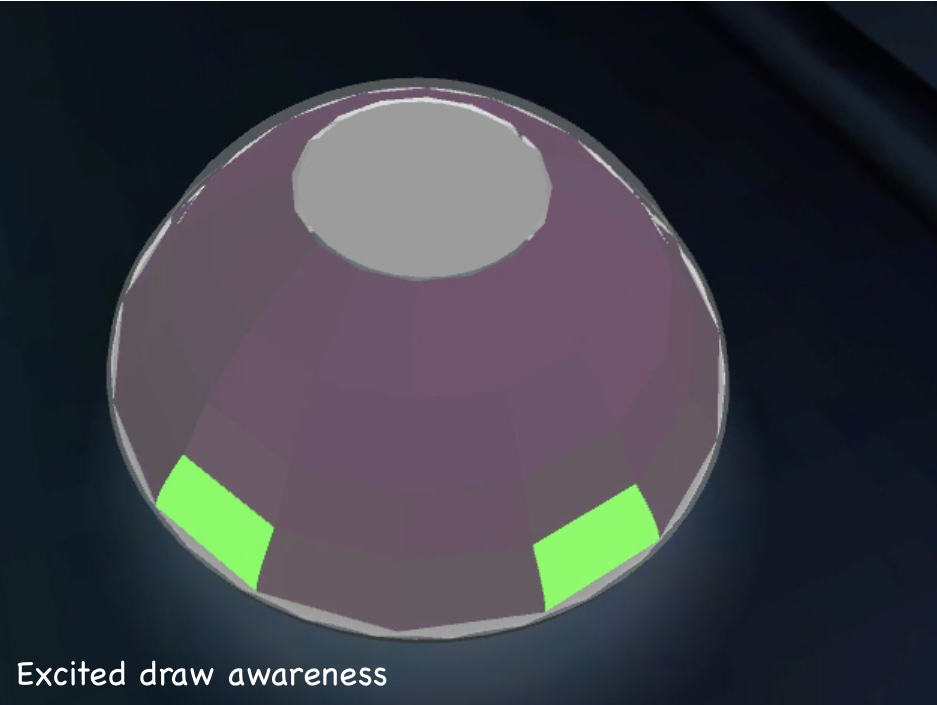

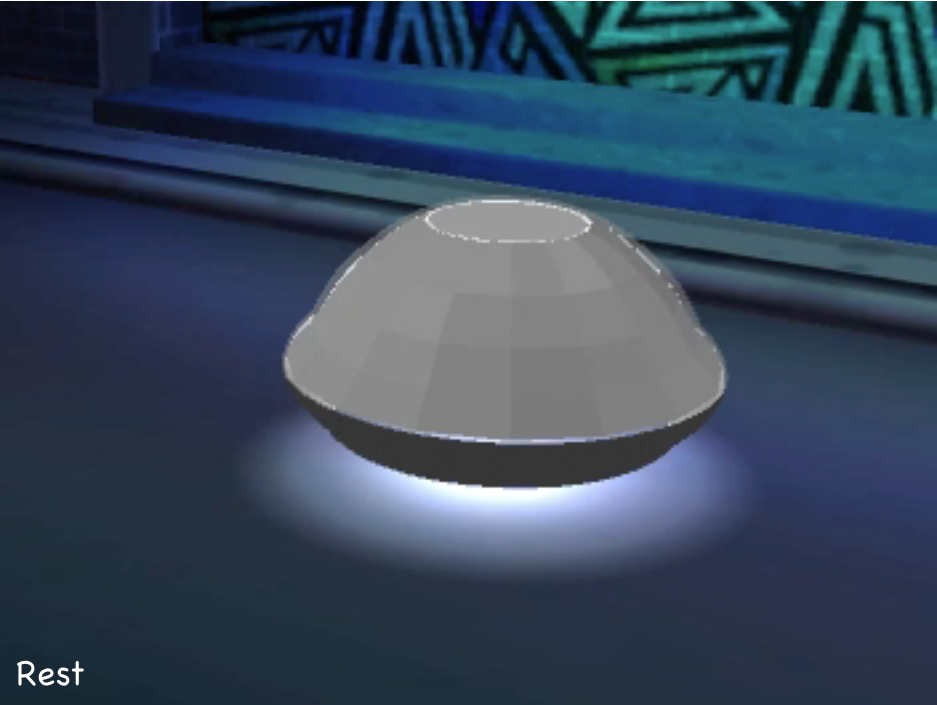

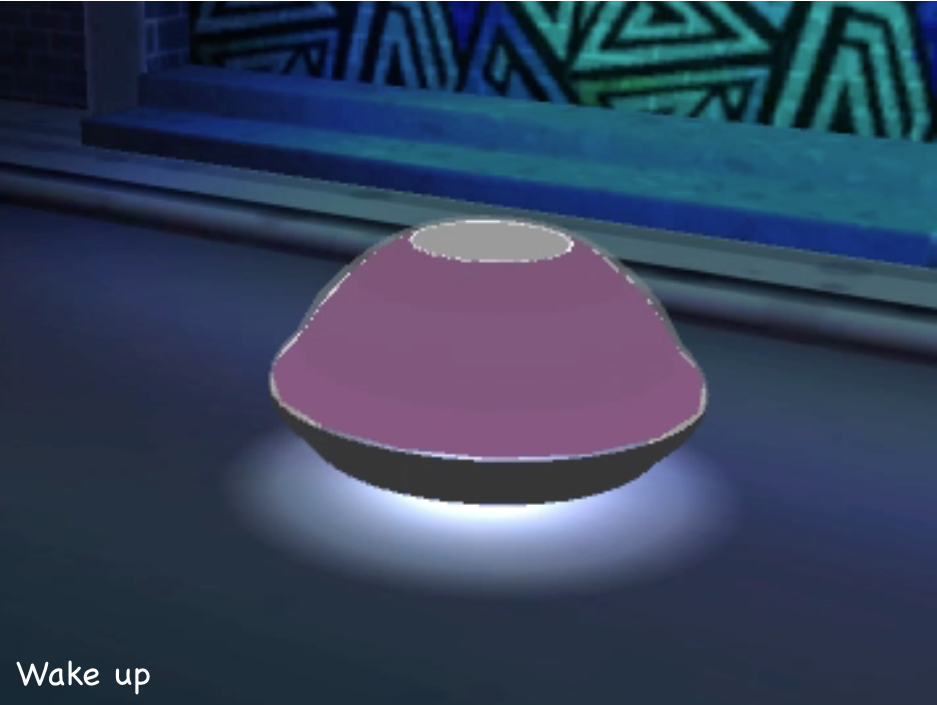

- Woodie moves around freely, switching between states; with each switch, the visual expression changes and a sound is emitted. The sound is clearer the nearer you are to Woodie. There are no clickable items in the virtual environment. The only point of interaction is moving in front of Woodie (its front is signified by the eyes).

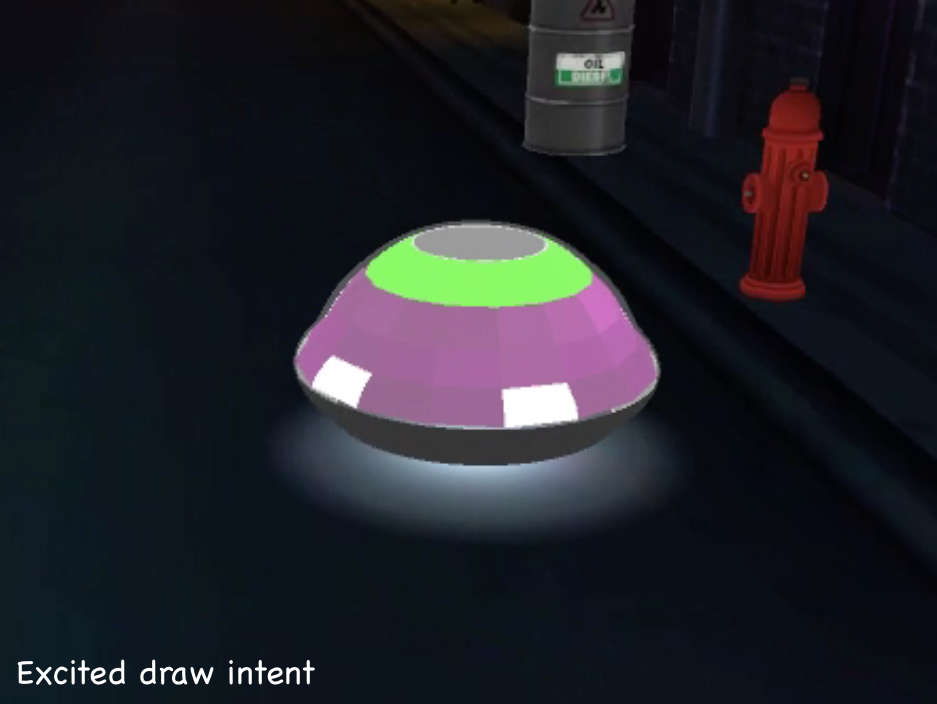

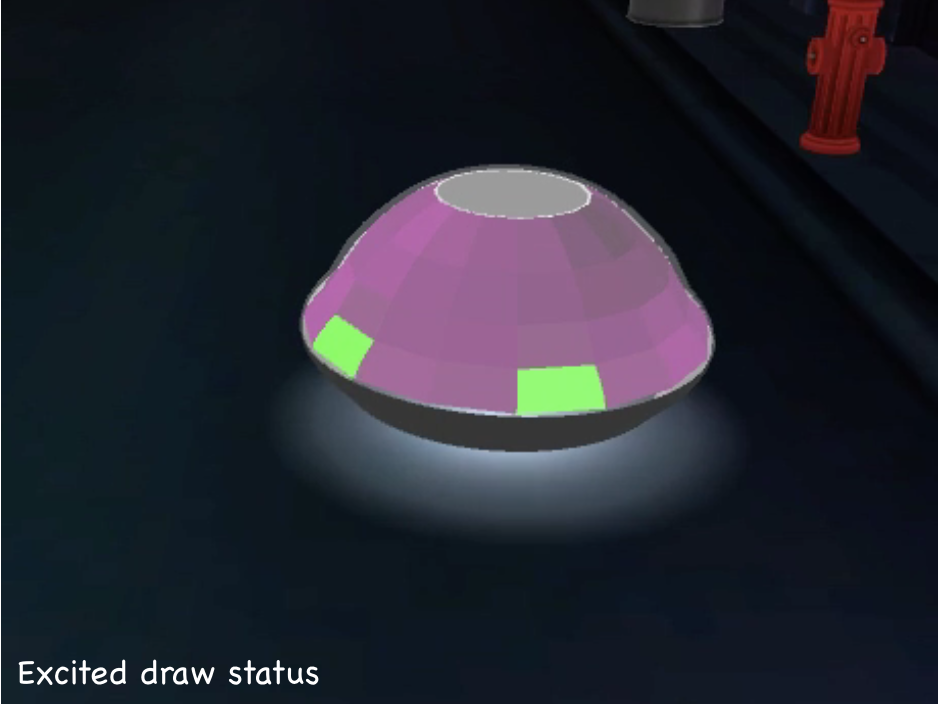

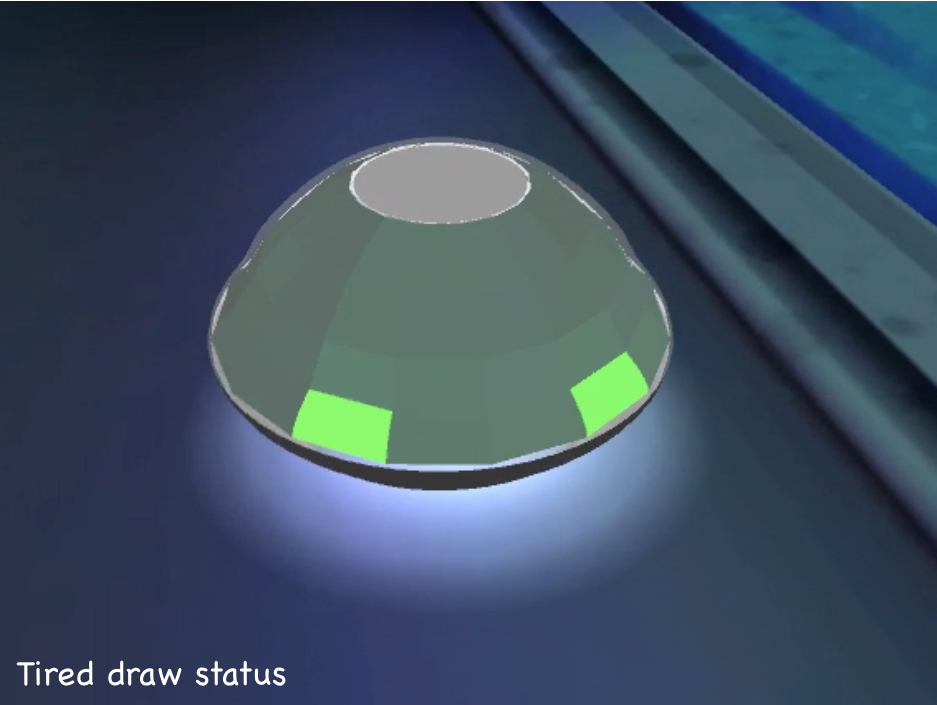

- Woodie goes through a cycle. It starts in an excited state (bright pink), where it moves (white eyes), shows an intent to draw (green halo), then starts drawing (green eyes). It then changes into a tired state (drab green), where it again moves, shows an intent to draw, then starts drawing. Finally, it goes into a resting state. After some time it wakes up and repeats the cycle. At any moment, except during the rest and wake-up states, an awareness interaction may be triggered by walking in front of Woodie.

- Please note: Woodie does not actually draw in this prototype; drawing is only represented through the draw expression.
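For readers who want to reason about the cycle described in the notes above, it can be sketched as a small state machine. This is only an illustrative Python sketch, not the prototype's actual code: the names `CYCLE`, `NO_AWARENESS`, `can_trigger_awareness` and `first_states` are hypothetical, the mood of the rest and wake-up states is left as `None` because the notes do not give one, and state durations are omitted because they are not specified.

```python
from itertools import cycle

# One full pass through Woodie's cycle, in the order given in the notes.
# Each state is a (mood, activity) pair. The labels are illustrative only.
CYCLE = [
    ("excited", "move"),            # bright pink, white eyes
    ("excited", "intent_to_draw"),  # bright pink, green halo
    ("excited", "draw"),            # bright pink, green eyes
    ("tired", "move"),              # drab green
    ("tired", "intent_to_draw"),
    ("tired", "draw"),
    (None, "rest"),                 # mood not specified in the notes
    (None, "wake_up"),
]

# Activities during which walking in front of Woodie has no effect.
NO_AWARENESS = {"rest", "wake_up"}


def can_trigger_awareness(state):
    """Return True if an awareness interaction may start in this state."""
    _mood, activity = state
    return activity not in NO_AWARENESS


def first_states(n):
    """Return the first n states of the endlessly repeating cycle."""
    states = cycle(CYCLE)
    return [next(states) for _ in range(n)]


if __name__ == "__main__":
    for mood, activity in first_states(len(CYCLE) + 1):
        aware = can_trigger_awareness((mood, activity))
        print(f"{mood or '-':8} {activity:15} awareness possible: {aware}")
```

Running the script prints one full pass through the cycle followed by the first state of the next pass, which makes the repetition and the two states that ignore the visitor easy to see.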